I am okay at drawing. That means: I am probably better at drawing than

a random person walking down the street but I'm far, far behind the type

of artists that regularly post in Instagram. I am also mostly self-taught:

I took some initial lessons via mail from the well-known (at the time)

Modern Schools,

gave up for a couple years, and picked it back up in my late teens when

I needed something to do besides programming and not having friends.

Some of my drawings have been published,

and one in particular has been stolen countless time by people who

thinks copying things from the internet without attribution is fine.

I was recently asked what I would recommend to someone who wants to

learn how to draw. This question took me by surprise for two reasons: one,

because I was never asked this before, and two, because my answer was

surprisingly useless even to me:

Any tutorial you find online will give you the right steps. But you'll

only understand them after you already know how to draw.

This is a pointless answer, which also happens to be 100% correct.

This post is my attempt at giving a slightly clearer answer, explaining

why anyone would think that my advice makes sense and hopefully give

beginners some good points on where to start.

Note 1: this post contains links to drawings of naked people. If you are

not comfortable with drawn nudity, you should probably not follow the links

and definitely reconsider whether figure drawing is good for you.

The boring advice

All drawing is, at its core, more or less the same. Whether you are

interested into realistic drawing, comic drawing, manga drawing (a term

I hate), webcomics or editorial cartoons, the art of representing human

figures in 2D is based on 90% the same rules. Sure, US comics have more

muscles and japanese manga characters have no nose, but the fundamentals

are the same. A typical drawing curriculum should include:

- How to sketch a human figure. This guide

is relatively good, while this one

sucks for reasons I'll explain later on. If you've seen those wooden

figures,

they are useful for getting the hang of this step.

- The proportions of the human body. More specifically,

this guide

on how many heads you need to draw a full body.

- The proportions of the face. This is annoying enough that it often

warants a section on its own.

- How perspective works. One, two, and three points perspective are

the typical ones.

- How shadows work. Getting it perfect will take a long time, but

"dark part is dark" will get you far with little effort.

Once you reach this point, you can either start learning about muscles

and improve your anatomy (have you ever stopped to think about how weird

knees look?), or become a caricaturist and call it a day.

The number of books and tutorials out there convering all these points is

virtually infinite, and therefore any book you choose it's going to be

probably fine. If you want some more specific advice, multiple generations of artists

have learned with Andrew Loomis' books, which are freely available on

the Internet Archive. You should start with Figure drawing for all it's worth,

follow up with Drawing the head and hands,

and fresh up your perspective with the first half of Successful drawing.

Practical advice I: Keep drawing

There are two extra pieces of advice worth discussing.

The standard advice says "keep drawing until you become good at it",

which is technically true but only barely. The full, honest version

should say:

Start with one drawing. It will suck, and that's fine. Once you're

finished, look at it objectively and enumerate its defects. For

your next drawing, focus on solving those defects. Repeat until

you consider yourself good enough1.

In other terms: you can draw circles all day and all night

for years, but that won't make you any better at drawing squares. If you

want to get better at drawing, you first need to be aware of what's

there to improve.

That doesn't mean that you can't be happy about something you just drew.

Few things are as rewarding as putting your art supplies to the side,

looking at your drawing, and admiring something knowing that you made

it. All I'm saying is: you need to know what your blind spots are. If

you are like me and your eyes are always sliiiiightly out of alignment,

it is perfectly fine to still be happy about that portrait you just made.

But if you are not honest and accept that yes, that one eye looks weird,

then you will never learn how to fix it2.

Practical advice II: Copy other people

As the quote goes,

"Good artists copy, great artists steal".

Therefore, it is your duty as aspiring artist to copy as much as you can.

Most self-taught artists I know started the same way, copying drawings

over and over until they felt comfortable enough to start doing their own.

My suggestion: find an artist you like. Pick one of their drawings and

copy it. Add the final work to your sketch folder. Repeat. This exercise

serves several purposes:

- It will improve your pencil grip, make your lines stronger, and

improve your technique overall.

- It allows you to focus on a sub-part of the problem (drawing a figure)

without having to worry about the complicated stuff - you don't need

to think about perspective, shadows or posture because the artist

already did it for you.

- It helps you to build your personal portfolio. It will help you visualize

your progress, and gives you something to brag about whenever someone

learns you are drawing and asks you to see something you've done.

Plus, it's not like you wanted to throw those drawings away, right?

- It will help you answer questions you didn't know you had. Do you

want to know how to draw a feminine-looking nose? Copy one of

Phil Noto's illustrations. Would you like to

know how does a professional go from zero to done? You can watch

professionals like Jim Lee do a couple pieces in real time

online and even explain their

process as they go. Are you wondering how much attention to pay to

clothes and background? Once you notice that classical painters

couldn't care less about whatever is below your shoulders,

maybe you won't lose your sleep about it either.

Eventually, you'll start noticing that different artists have different

skills to offer. Maybe that guy draws cool hands, that other artist

draws clothes very well, and that third other one has very expressive

faces. Copying their work helps you understand the tricks they are

using, and adding them to your repertoire helps you develop your own style.

Rest of the owl

The final point is both super important and really difficult to explain

to beginners.

Are you familiar with the

how to draw an owl meme?

This picture is very popular in amateur art circles because it goes

straight to the core issue: that most tutorials will take your hand and

guide you step-by-step, but then they will let go at a critical step and

you'll fall down a metaphorical cliff.

The root of the problem, I think, is that one step where the book

tells you to "do what feels natural" or to "just keep going". What these

people forget, however, is that learning what feels natural takes a lot

of practice!

This tutorial

I mentioned above is as bad as it gets: the instructions

tell you to "Draw some vertical and horizontal lines to plan your

drawing", which is completely useless advice that only makes sense once

you know which lines to draw and where. Whoever wrote that guide has

forgotten what it was to be a beginner, and their advice is really not

helping.

When that happens, you have two choices. You can look for a better

tutorial, or you can keep going, and see how far you make it. There is no

shame in trying and failing, and who knows? maybe you'll still make it.

Truth be told, there is a point at which no tutorial can help you and all

that's left for you to do is to just draw. But that only applies for

specific, advanced tutorials. It is the sad truth that, as a beginner,

you will often recognize bad tutorials only once you are stuck in them.

Nobody said the life of an artist was easy.

Closing remarks

This guide ended up being longer than I intended, and half as long as

it should be. That's always going to be a problem: the average artist

does not let structure get in the way of their vision, and

any attempt at a "formal" answer will stop halfway (as I have complained

before).

That said, if you would still appreciate a more structured approach, I have

heard good things about Betty Edward's book

Drawing on the right side of the brain.

And finally: have fun. All of this advice is useful for when you want to get

objectively better, but there's a lot to be said in favor of simply drawing

because you enjoy it.

Happy drawing!

Footnotes

-

Fair warning: in my experience, most artists never feel that they are

"good enough". This is a well-known bug of art.

-

I believe the process of "find defect, correct defect, repeat" is

why most artists I know are never happy about their work.

Seriously, go to an artist and tell them you like a particular

drawing of them - there's a good chance that they'll give some excuse

for why the drawing sucks.

I have been giving programming languages a lot of thought recently.

And it has ocurred to me that the reason why (reportedly) lots of people

fail at learning how to program is because they are introduced to it at

entirely the wrong level.

If you as a beginner search "Python tutorial" right now, you will get

lots of very detailed, completely correct, very polished tutorials that

will teach you how to program in Python, but that will not teach you

how to program. Conversely, if you search for "how to program", the

first results will be either completely useless advice such as "decide

what you would like to do with your programming knowledge" or they ask

you to choose a programming language. You might choose Python, in which

case you are now back to square one.

One of the founding principles of my field is that "Computer Science is

no more about computers than astronomy is about telescopes". In other

words, programming is a skill that it's expressed typicall using

programming languages, but it's not exclusively about them. And

programming, the skill underlying all of these programming languages,

is hard.

To be a good programmer, you need to master three related skills:

- Understand how to convert messy real-life problems into a clearer

version with less ambiguities.

- Understand what the best practical approach to this problem is, and

choose one as a possible solution.

- Understand how to use programming languages and data structures to

implement that solution.

Mastering the first skill requires an analytical mind, and in particular

forces you to see the world in a different way. If someone asks you for

a program to keep track of how many people are inside a room, you need

to stop thinking in terms of people and rooms and think in terms of

numbers and averages. You also need to account for badly-defined situations:

if a woman gives birth inside the room, is your solution good enough to

increase the room count by one?

This part alone is quite hard. Some people make a living out of it as

software requirements engineers, meeting with clients and discussing

some approaches that would make sense. It also requires at least a

surface level understanding of the type of solutions that one could

realistically employ. If you ever wondered why your high-school math

teacher always asked you to turn apples and trains into equations and

solving for x, well, this is why: they were teaching you how to

solve real-world problems with simpler methods.

In order to master the second skill "choose a viable solution", you

need to read a lot about which problems are easy and which ones are hard.

There are some problems that a programmer solves daily, and some problems

for which the best known solution would still take thousands of years.

If you think that finding new solutions to problems is interesting, I

encourage you to go knock at the Math department of your nearest

university. They do this for a living, and will be very excited to have

you around.

Finally, the third and last skill "implement a solution" requires

you to write it down in a way that computers can understand.

Half the job requires understanding common concepts for structuring programs

(variables, databases, data structures, networks, and so on), and the other half

requires learning the syntax of your preferred programming language.

And here we reach the core of this post's answer. If you type

"Python tutorial" right now, what you'll get are very detailed guides on

how to acquire the second half of the third skill, also known as "the

unimportant one". Sure, programmers love discussing which programming

language is better and how not to write code,

but here's a little secret: in the larger scale of things, it rarely

matters. Some programming languages are better suited for specific

tasks, true, but the best programming language is not going to be of

any help if you don't know what you are trying to build.

At its core, programming is learning how to solve problems with a

specific set of tools. And while you do need to understand how to use

those tools, they are completely useless if no one explains to you how

to solve problems with them.

If knowing how to use a pen doesn't make you a writer, and

knowing how to use a wrench doesn't make you a mechanic, teaching you a

programming language and expecting you to become a programmer overnight

is just going to leave you confused and frustrated. But remember: it's

not you, it's them.

Appendix I: what does problem solving looks like?

Let's say someone asks me to "write a program to know who I have been

in contact with in any given day", a problem known as

contact tracing

that has been in the news in the past weeks. How would the skills above

come into play?

(Note: for the sake of simplicity, I am going to solve this problem

badly. It's a toy example, so don't @ me!)

The first step is to model this situation in a formal, more structured

way. Real people are difficult to work with, but luckily

we don't care about most things that make them human - all we care about

is where they have been at any point in time. Therefore, we replace

those real people with "points", keep track of their GPS coordinates at

all times, and throw all of their remaining attributes away.

We have now turned our problem into "tell me which GPS coordinates

(i.e., points) have been close to my GPS coordinates at any given

time". We can simplify the problem further by defining what

"close to me" means, and we turn the problem into "give me a list of

points that have been 1 meter or closer to me at any given time".

Next, we need to find a way to efficiently identify which points have

been close enough to our coordinates. Since there is a lot of people

in the world, we start by crudely removing all points that are more than

10km. away from me - this can be done very quickly, and it probably won't

affect our results too badly.

We now need to refine our search, and therefore we take a dive

into the geometry literature. After a quick look, I decided that building a

Quadtree is the best solution

for what I want to build. Note that I only have a passing knowledge of

what Quadtrees are, but that's fine: once I have a hint of where the

solution might be, I can search further and learn the details as I go.

And finally we get down to writing code. If our programming language

doesn't already include an implementation of a Quadtree data structure,

we might have to do it ourselves. If we choose Python, for instance, we

need to understand how to create a class, how to use lists of objects,

and all those other implementation details that our Python tutorial has

taught us. Similarly, storing the list of points will probably require

a database. Each database has a different strong point but, as I said

earlier, knowing which database to use is not as important as knowing

that some database is the right tool.

Let's now picture the same exercise in revers: imagine I come to you and

say "Write me a program to know who I have been in contact with in any

given day. Here's a guide on how to use Quadtrees in Python".

You wouldn't find that last bit of any use, would you?

As many other people around my age, I learned programming from a book. In

particular, I started programming with a 3-books series called Computación para

niños (Computers for kids). Volume 3 was dedicated to programming in BASIC, and

it opened the door to what is now both my profession and hobby.

That said, that book was also the source of a 25-ish-years-long frustration, and

that story is the point of today's article.

In the ancient times known as "the 90s", it was still common to get printed code

for games that you had to to type yourself. This book, in particular, included a

game called "Lunar rocket" in which you were supposed to (surprise!) land a

rocket on the moon. For context, this is how the game was sold to me:

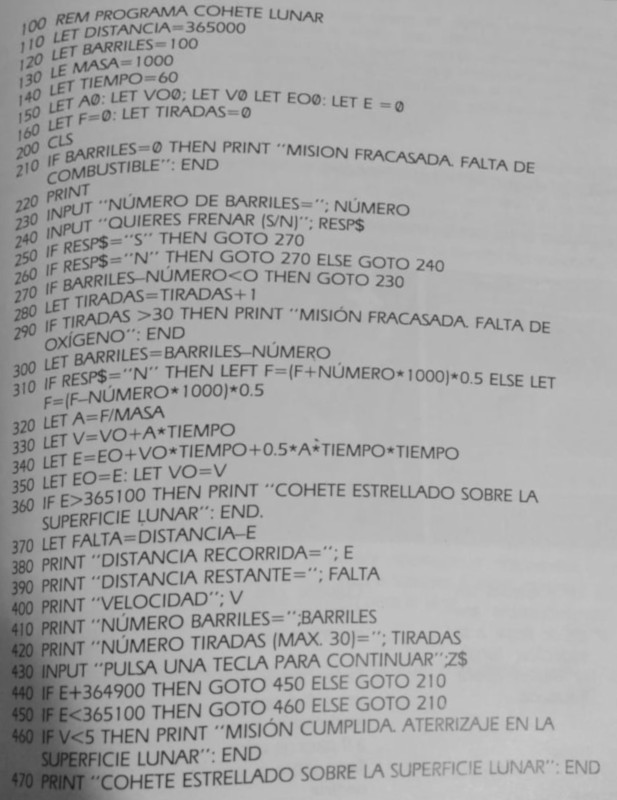

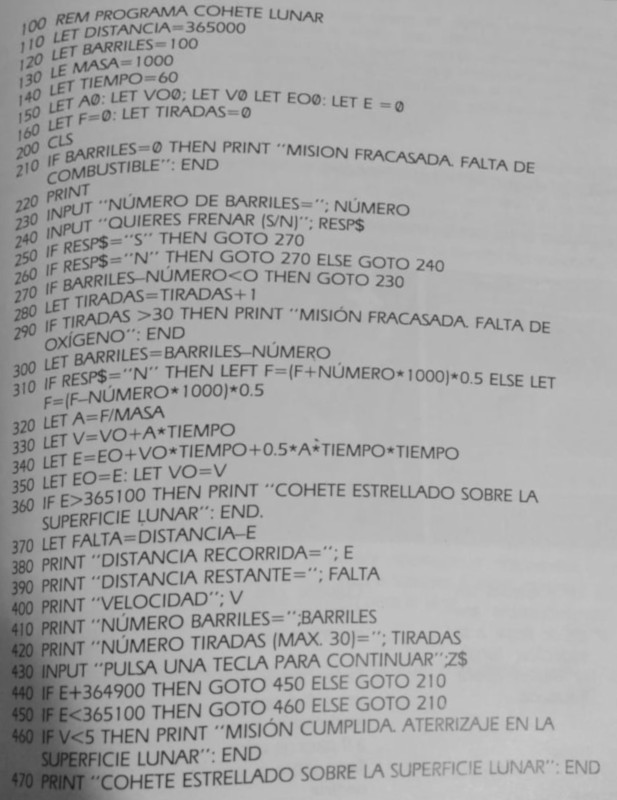

And this is what the code looks like:

Suffice to say, the program never worked and I couldn't understand why. I spent

weeks trying to tweak it to no avail, getting different errors but never making

a full run. And no matter how hard I tried, I could never get a single picture

to show on screen. Eventually I gave up, but the weeks I spent trying to

understand what I did wrong have been on my mind ever since.

That is, until last Sunday, when I realized that I should go back to this

program and establish, once and for all, whose fault all of this was.

Problem number one was that the book shows pretty pictures, but the program is

text-only. That one is on me, but only partially. Sure, it was too optimistic of

me to expect any kind of graphics from such a short code. But I distinctly

remember giving it the benefit of the doubt, and thinking "the pictures are

probably included with BASIC, and one or two of these lines are loading them".

That was dead wrong, but I'll argue that young me at least had half of a good

idea. A few years later I would learn that the artwork and the game rarely had

anything to do with each other, a problem that

has not entirely gone away.

Now, problem number two... That code I showed above would never, ever work.

My current best guess is that someone wrote it in a rush leaving some bugs in,

and someone else typed it and introduced a lot more. In no particular order,

- Syntax errors: Line 130 has a typo, the variable name "NÚMERO" is invalid

because of the accent, and line 150 is plain wrong. The code also uses ";"

to write multiple instructions in a single line, but as far as I know that's

not valid BASIC syntax.

- The typist sometimes confuses "0" with "O" and ":" with ";" and " ". This

introduced bugs on its own. Line 150 (again) shows all mistakes at once.

- Error handling is a mess: if you enter the wrong input, you are simply asked

to enter it again. No notification of any kind that you did something wrong.

- The logic itself is very convoluted. GOTOs everywhere. Line 440 is

particularly bad, and could be easily improved.

- Some of the syntax may be valid, but it was definitely not valid in my

version of Basic. And seeing how my interpreter came included with the book,

I feel justified in not taking the blame for that one.

And so I set out to get this to run once and for all. The following listing

shows what a short rewrite looks like:

LET DISTANCE=365000

LET BARRELS=100

LET MASS=1000

LET TIME=60

LET A0=0

LET VO0=0

LET V0=0

LET EO0=0

LET E=0

LET F=0

LET THROWS=0

DO WHILE BARRELS>0 AND E<=364900 AND THROWS <= 30

LET REMAINING=DISTANCE-E

PRINT "DISTANCE SO FAR="; E

PRINT "DISTANCE TO GO="; REMAINING

PRINT "SPEED"; V

PRINT "BARRELS LEFT="; BARRELS

PRINT "THROWS LEFT(MAX 30)="; THROWS

PRINT "-----"

INPUT "NUMBER OF BARRELS?"; NUMBER

INPUT "DO YOU WANT TO BRAKE (Y/N)"; RESP$

IF NUMBER>BARRELS THEN

PRINT "NOT ENOUGH BARRELS!"

ELSEIF RESP$ <> "Y" AND RESP$ <> "N" THEN

PRINT "INVALID INPUT!"

ELSE

LET THROWS=THROWS+1

LET BARRELS=BARRELS-NUMBER

IF RESP$="N" THEN

LET F=(F+NUMBER*1000)*0.5

ELSE

LET F=(F-NUMBER*1000)*0.5

END IF

LET A=F/MASS

LET V=VO+A*TIME

LET E=EO+VO*TIME+0.5*A*TIME*TIME

LET EO=E

LET VO=V

END IF

LOOP

IF BARRELS <= 0 THEN PRINT "MISSION FAILED - NOT ENOUGH FUEL"

IF THROWS > 30 THEN PRINT "MISSION FAILED - NOT ENOUGH OXYGEN"

IF E>364900 THEN

IF V<5 THEN

PRINT "MISSION ACCOMPLISHED. ROCKET LANDED ON THE MOON."

ELSE

PRINT "MISSION FAILED. ROCKET CRASHED AGAINST THE MOON SURFACE."

END IF

END IF

I think this version is much better for beginners. The code now

runs in a loop with three clearly-defined stages (showing information, input

validation, and game status update), making it easier to reason about it.

And now that the GOTOs are gone, so are the line numbers. However, and in order

to keep that old-time charm, I kept all strings in uppercase and added no

comments whatsoever.

I also added some input validation: the BASIC interpreter I'm

using (Bywater Basic) will

still crash if you enter a letter when a number is expected, but that's outside

what I can fix. At least you now get a message when you use too many barrels

and/or you choose other than "Y" or "N".

It is only fair to point out something that I do like about the original code:

that the variable names are descriptive, and in particular that the physics

equations use the proper terms. If you are familiar with the physics involved

here, those equations will jump at you immediately.

If I had time, I would still tie a couple loose ends in my version.

A proper rewrite would ensure that the new code behaves exactly like the old

one, bugs and all. And there's a good chance that I have introduced some new

bugs too, given that I barely tested it. I also feel like making a graphical

version, using the original artwork and adding some simple animations on top.

But even then, I finally feel vindicated knowing that younger me had no chance

of making this work. Even better: the next exercise, a car race game, just gave

you a couple pointers on how to draw something on the screen, and then left you

on your own. That one would take me some time today.

Next on my list: finally read the source code of

Gorilla.bas. I know I

tried really hard to understand it when I was 10, so maybe I should get

closure for that one too.

Once again, and as seen on

this video,

a Tesla car driving alone on its lane on a clear day runs straight into

a 100% visible, giant overturned truck. I say "once again" because Tesla had

already made the news in 2018

when one of its self-driving cars ran into a stopped firetruck.

This is not an unknown bug - this is by design. As Wired

reported back then,

the Tesla manual itself reads:

Traffic-Aware Cruise Control cannot detect all objects and may not

brake/decelerate for stationary vehicles, especially in situations when you

are driving over 50 mph (80 km/h) and a vehicle you are following moves out

of your driving path and a stationary vehicle or object is in front of you instead.

The theory, as it goes, is that a giant stationary truck stopped in the middle

of a highway is so an unlikely event that the system considers it a

misclassification and ignores it. If your car is a 2018 Volvo, it may even

accelerate.

There is a popular argument that often surfaces when people try to point out

how completely ridiculous this situation is: that the driver should always be

alert, and that they should be prepared to take control of the vehicle at any

time. And if your car is equipped with "lane assist", then that's fine: the

name of the feature itself is telling you that the technology is only there to

ensure that you stay in your lane, and anything else that might happen is your

responsibility.

But when your promotional materials have big, bold letters with the words

"Autopilot" and your promotional video shows a man prominently resting his

hands on his legs, you cannot hide behind a

single sentence saying "Current Autopilot features require active driver

supervision and do not make the vehicle autonomous". Why? First, because we

both know this is a lie - if you weren't intending on deceiving people into

believing their car is autonomous, you wouldn't have called the feature

"Autopilot" and you wouldn't have made such a video. Second, and more important,

virtually the entire literature on attention will tell you that the driver will

not be in the right state of mind to make a split-second decision out of the

blue. No one believes that someone will activate their Autopilot™ and

remain perfectly still and attentive their entire time, because human attention

simply doesn't work like that.

Hopefully, some Tesla engineer will come up with a feature called "do not run

into the clearly-visible obstacle at full speed" in the near future. Until then,

all of you people drinking and/or sleeping in your moving cars should seriously

consider not doing that anymore.