Memories

As a software developer I constantly have to fight the urge to say "this is nonsense" whenever something new comes up. Or, to be more precise, as a software developer who would like to still find a job 10 years from now. I may still prefer virtual machines over clouds, but I also realize that keeping up to date with bad ideas is part of my job.

I've gone around the Sun enough times by now to have seen the rise of technologies that we now take for granted. Today's article is a short compilation of a couple of them.

Seat belts

When I was a child, 2-point seat belts were present in all cars but mostly as decoration. In fact, wearing your seat belt was seen as disrespectful because it signaled that you didn't trust the driver, or even worse, that you disapproved of their current driving. And they were not wrong: since most people didn't use their seat belts in general, the only reason anyone would reach out to use theirs was when they were actively worried about their safety. Drivers would typically respond with a half-joke about how they weren't that bad of a driver.

It took a lot of time and public safety campaigns to instill the idea that accidents are not always the driver's fault and that you should always use your seat belt. Note that plenty of taxi drivers in Argentina refuse to this day to use theirs, arguing that wearing a seat belt all day makes them feel strangled. Whenever they get close to the city center (where police checks are) they pull their seat belt across their chest but, crucially, they do not buckle it in. That way it looks as if they are wearing one, sparing them a fine.

Typewriters and printers

My dad decided to buy our first computer after visiting a lawyer's office. The lawyer showed my dad this new machine and explained how he no longer had to re-type every contract from scratch on a typewriter: he could now type it once, keep a copy of it (unheard of), modify two or three things, run a spell checker (even more unheard of!), and send it to the printer. No carbon paper and no corrections needed.

A couple of years later my older brother went to high school at an institution oriented towards economics, which included a class on shorthand and touch typing on a typewriter. Homework for the latter consisted of typing five to ten lines of the same sentence over and over. As expected, the printer investment paid off.

... until one day my brother came home with bad news: he was no longer allowed to use the computer for his homework. As it turns out, schools were not super keen on the fact that you could correct your mistakes instantaneously, but electric typewriters could do that too, so they let it slide. But once they found out that you could copy/paste text (something none of us knew at the time) and reduce those ten lines of exercise to a single one, that was the last straw. As a result, printed pages were no longer accepted and, as a reward for being early adopters, my parents had to go out and buy a typewriter for my brother to do his homework.

About two years later we changed schools, and the typewriter has been kept as a museum piece ever since.

Neighborhoods

My parents used to drink mate every day in our front yard, while my friends and I would do the same with a sweeter version (tereré). My neighbors used to do the same, meaning that we would all see each other in the afternoon. At that point kids would play with each other, adults would talk, and everyone got to know each other.

This is one of those things that got killed by modern life. In order to dedicate the evening to drinking mate you need to come back from work early, something that's not a given. You also need free time, which in a way meant that you needed a stay-at-home parent (this being the 90s, that would disproportionately mean the mom). The streets were not only expected to be safe but also perceived to be safe, a feeling that went away with the advent of 24-hour news cycles. Smartphones didn't help either, but this tradition was long extinct in my life by the time cellphones could run anything more complex than Snake.

Unlike other items in this list, I harbor hope that these traditions still live on in smaller towns. But if you wonder why modern life is so isolated, I suggest you make yourself some tea and go sit outside for a while.

Computers

Since this could be a book by itself, I'll limit myself to a couple of anecdotes.

When Windows 3.11 came out, those who were using the command line had to learn how to use the new interface. Computer courses at the time would dedicate their first class to playing Solitaire, since it was a good exercise to get your hand-eye coordination rolling. Every time I think about it I realize what a brilliant idea this was.

My first computer course consisted of me visiting a place full of computers, picking one of the floppy disks, and figuring out how to run whatever that disk had. If it was a game (and I really hoped it would be a game) I then had to figure out how to play it, what the keys did, and what the purpose of the game was. There were only two I couldn't conquer at the time: a submarine game that was immune to every key I tried (it could have been 688 Attack Sub) and the first Monkey Island in English (which I didn't speak at the time). I had trouble with Maniac Mansion too until my teacher got me a copy of the codes for the locked door.

This course was the most educational experience of my life, and I've struggled to find a way to replicate it. Being allowed to explore at my own pace, do whatever I wanted, and ask for help whenever I got stuck set me on an exploration path that I follow to this day. The hardest part is that this strategy only works with highly motivated students, which is not an easy requirement to fulfill.

How not to sell tickets

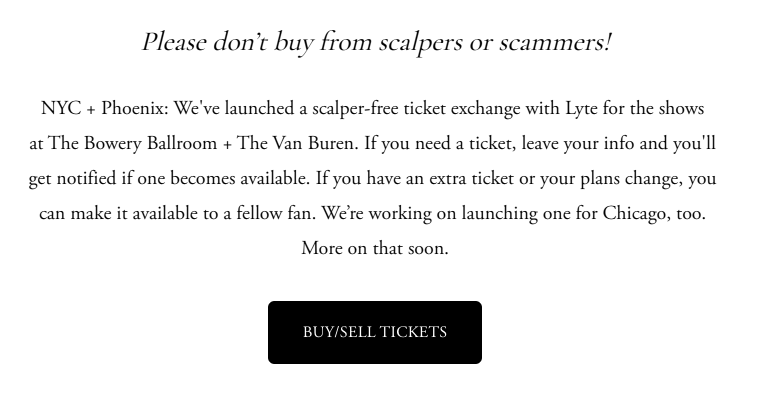

Let's say you are a band and you want to keep scalpers from buying all of your tickets and reselling them at ridiculous prices.

By now we know what works: you sell tickets with assigned names on them, and check at the door that the name on the person's ID (or, alternatively, credit card) matches the name on the ticket. You can also offer a "+1 option" for bringing someone along, but they must be accompanied by the person who bought the tickets in the first place. No ID, no entrance.

By contrast, here's what doesn't work:

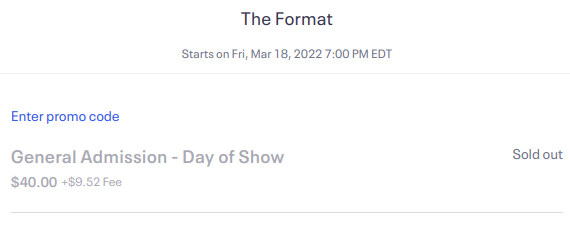

How do I know that it doesn't work? For starters, because all of the regular $40 tickets are sold out:

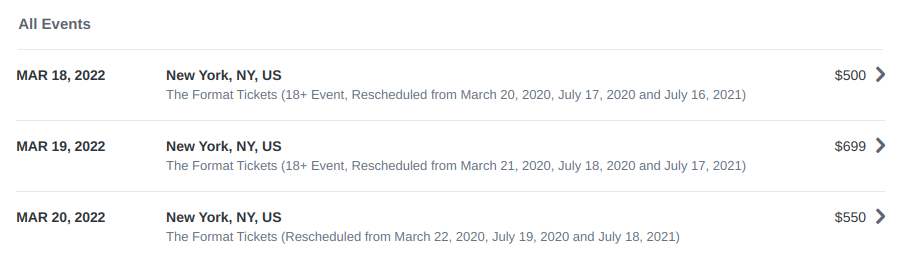

And because the only available tickets are those sold by scalpers at 10 times their value:

You know what I would do if I were a scalper? I would buy as many tickets as I could, and then I would also sign up for the exchange with as many fake addresses as possible. It doesn't matter that I can't use the exchanged tickets afterwards - as long as the supply is reduced, the value of the tickets I bought first will only go up. Since every overpriced ticket offsets the cost of the other 9 I got early, it all works out for me in the end.
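The scalper's math above can be made concrete with a back-of-the-envelope sketch. It uses the figures from this post ($40 face value, resale at roughly 10 times that); the function name and parameters are hypothetical, and the model ignores fees and tickets that never sell:

```python
import math


def resales_to_break_even(tickets_bought: int, face_value: float, markup: float) -> int:
    """Number of tickets a scalper must resell to recover what they spent.

    Hypothetical model: every ticket is bought at face value and every
    resold ticket goes for face_value * markup; fees are ignored.
    """
    total_cost = tickets_bought * face_value
    resale_price = face_value * markup
    return math.ceil(total_cost / resale_price)


# Ten $40 tickets cost $400, so a single resale at 10x covers all of them.
print(resales_to_break_even(10, 40, 10))   # 1
print(resales_to_break_even(100, 40, 10))  # 10
```

In other words, at a 10x markup the scalper only needs to sell one ticket in ten; everything else is profit or, as in the exchange trick, a way to squeeze supply.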

By now it is well known that ticket companies don't get rid of scalpers because they don't want to. The method I mentioned in the first paragraph is proven to work, but why would ticket companies get rid of scalpers when they can reach deals with them and get a second cut on the same ticket?

Which brings me to my final point. I think there are three types of bands:

- those that care about their fans and successfully prevent scalpers from getting all the tickets,

- those that care about their fans but don't know what to do (and/or do it wrong), and

- those that don't care about scalpers because they get paid anyway (or get paid even more)

If you are a member of the first type of band I applaud you for standing up for your fans. And if you know someone who is in the second situation, feel free to forward them this post.

I could share some rough words with those who belong to the third group. But honestly, why bother? It's not like there's a shortage of good musicians. I'll go watch those instead.

April 2022 edit: John Oliver has a funny segment on this topic. You can watch it on YouTube.

Brain dump

Here's a list of short thoughts that are too long for a ~~tweet~~ toot but too short for a post.

On old computer hardware

I spent the last month of my life fixing my family's computers. That meant installing Roblox on a tablet with 1 GB of RAM, fixing antivirus on Windows 7, dealing with Alexa in Spanish, and trying to find cheap ink for printers with DRM. Fun fact: HP uses DRM to forbid you from importing ink, and then stopped delivering ink to my family's city.

Modern hardware can have a long, long life, but this won't happen unless software developers start optimizing their code, even a little. Sure, Barry Collins may not have a problem with an OS that requires 4 GB of RAM, but I feel I speak for tens of thousands of users when I say that he doesn't know what he's talking about.

On new computer hardware

I know that everyone likes to dump on Mark Zuckerberg, and with good reason: the firm formerly known as Facebook is awful and you should stay away from everything and anything they touch. Having said that, there's a reasonable chance that VR's moment has finally arrived. If you are a software developer, I encourage you to at least form an informed opinion before the VR train leaves the station.

On movies

I wasn't expecting to enjoy Ready or Not as much as I did. I also wasn't expecting to enjoy a second watch of Inception almost as much as the first time, but those things happened anyway. I was, however, expecting to enjoy Your Name, so no surprises there.

I also got into a discussion about Meat Grinder, a Thai film that is so boring and incoherent that it cured me of bad movies forever. No matter how bad a film is, my brain can always relax and say "sure, it's bad, but at least it's not Meat Grinder". I hold a similar opinion about Funny Games, a movie where even the actors on the poster seem to be ashamed of themselves. At least here I have the backing of cinema critic Mark Kermode, who called it "a really annoying experience". Take that, people from my old blog who said I was the one who didn't "get it".

On books

Michael Lewis' book Liar's Poker is not as good as The Big Short, but if you read the latter without the former you are doing yourself a disservice. I wasn't expecting to become the kind of person who shudders when reading that "the head of mortgage trading at First Boston who helped create the first CMO, lists it (...) as the most important financial innovation of the 1980s", and yet here we are.

I really, really, really like Roger Zelazny's A Night in the Lonesome October, which is why I'm surprised at how little I liked his earlier, award-winning book This Immortal. I mean, it's not bad, but I wouldn't have had it tie with Dune for the 1966 Hugo Award for Best Novel. I think it will end up overtaking House of Leaves in the category of books that disappointed me the most.

And finally, I can't make any progress with Katie Hafner and Matthew Lyon's book Where Wizards Stay Up Late because every time I try to get back to it I get an irresistible urge to jump onto my computer and start programming. Looks like Masters of Doom will have to keep waiting.

On music

My favorite song that I discovered this year so far is Haunted by Poe. Looking for more of her music, I learned how thoroughly lawyers and the US music industry destroyed her career. This made me pretty angry until I read that her net worth is well into the millions of dollars, so I guess she came out fine after all. And since the album was written as a collaboration with her brother while he was writing "House of Leaves", I guess I did get something out of that book in the end.

Some thoughts on learning about computers

Here's a thought that has been rattling around in my head for the last 10 years or so.

My first word processor was WordStar, but I never got to use it fully. After all, what use does a 10 year old have for a word processor in 1994?

I then moved on to MicroCom's PC-Flow for several reasons. First and foremost, it was already installed on my PC. Either that or I pirated it early enough not to make a difference - in either case, it had the major advantage of already being there. Second, it was "graphical" - it may have been a program for flow charts, but that didn't stop me from writing some awful early attempts at writing music that are now lost to the sands of time and 5.25-inch floppy disks. And third, because it printed - not all of my programs played nice with my printer, but this one did. The end result was that I wrote plenty of silly texts using a program designed for flow charts.

The late 90s were a tumultuous time for my computer skills. At home I had both MS Office '97 and my all-time-favorite Ami Pro, while in high school I had to use a copy of Microsoft Works that was already old at the time. The aughts brought Linux and StarOffice, which would eventually morph into OpenOffice and LibreOffice. Shortly afterwards I also learned LaTeX, making me care about fonts and citations to a degree that I would never have imagined.

And finally, the '10s brought Google Docs and MS Office 365. But the less said about them, the better.

The point of this incomplete stroll through memory lane is to point out the following: if there's a way to mark a text as bold in a document, I've done it. Cryptic command? Check. Button in a status bar that changes places across versions? Check. Textual markers that a pre-processor will remove before generating the final file? Check. Nothing? Also check. If you ever need to figure out how some piece of software expects you to mark something in bold, I'm your man.

And precisely because this function kept jumping around and changing shape throughout my entire life, I have developed a mental model that differentiates between the objective and the way to get there. If I have a reasonable belief that a piece of software can generate bold text, I'll poke around until I find the magic incantation that achieves this objective. But my story is not everybody's story. Most regular people I know learned how to make bold text exactly once, and then they stuck to that one piece of software for as long as possible. For them, text being bold and that one single button in that one single place are indistinguishable, and if you move the button around they'll suddenly panic because someone has moved their cheese.

I have long wondered what the long-term effect of this "quirk" in our education system is. And forecasts are not looking good: according to The Verge, students nowadays are having trouble even understanding what a file is. Instead of teaching people to understand the relation between presentation and content, we have been abstracting the underlying system to the point of incomprehension. The fact that Windows 10 makes it so damn hard to select a folder makes me fear that this might be deliberate - I'm not one to think of shady men ruining entire generations in the name of profit, but it's hard to find a better explanation for this specific case.

Based on my experience learning Assembly, pointers, and debugging, I believe that the best cure for this specific disease is a top-down approach[1] with pen and paper. If I were to teach an "Introduction to computers" class, I would split it into two stages: First, my students would write their intended content down, using their own hands on actual paper. They would then use highlighters to identify headers, text that should be emphasized, sections, and so on. At this stage we would only talk about content while completely ignoring presentation, in order to emphasize that...

- ... yes, you might end up using bold text both to emphasize a word and for sub-sub headers, but they mean different things.

- ... once you know what the affordances of a word processor are, all you need to figure out is where the interface has hidden them.
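The distinction in the list above can be sketched in code by keeping what the highlighters marked (the role) separate from how it is displayed (the style). Everything here is hypothetical, including the role names and the Markdown-like output; it only illustrates the idea, not how any real word processor works:

```python
# Hypothetical document: each span pairs a semantic role with its text.
DOCUMENT = [
    ("subsubheader", "Results"),
    ("body", "This step is"),
    ("emphasis", "important"),
]

# How each presentation is drawn, using Markdown-like markers.
WRAPPERS = {"bold": "**{}**", "italic": "*{}*", "plain": "{}"}


def render(document, styles):
    """Render spans by looking up each role's style; unknown roles stay plain."""
    return " ".join(
        WRAPPERS[styles.get(role, "plain")].format(text) for role, text in document
    )


# Both roles happen to look the same here...
print(render(DOCUMENT, {"subsubheader": "bold", "emphasis": "bold"}))
# ...but because they are different roles, emphasis can be restyled on its own.
print(render(DOCUMENT, {"subsubheader": "bold", "emphasis": "italic"}))
```

The first call prints `**Results** This step is **important**` and the second `**Results** This step is *important*`: the sub-sub header never changed, because only the style attached to "emphasis" did.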

We would then move on to the practical part, using a word processor they have never seen, so that the students get a rough idea of what the interface looks like in real life. And finally, my students would go home and practice with whatever version of MS Office is installed on their computer. If at least one of them tries to align text with multiple spaces only to feel dirty and re-do it the right way, I will consider my class a success.

Would this work? Pedagogically, I think it would. But I am painfully aware that my students would hate it. And good luck selling a computer course that doesn't interact with a computer. It occurs to me that perhaps it could be done in an interactive program, one that "unlocks" interface perks as you learn them. If I'm ever unemployed and with enough time on my hands, I'll give it a try and let you know.

Footnotes

[1] A top-down approach would be learning the concepts first and the implementation details later. Its counterpart would be bottom-up, in which you first learn how to do something and later on you learn what you did that for. Bottom-up gets your hands dirty earlier, similar to Mr. Miyagi's teaching style, while top-down keeps you from developing bad habits.

Write-only code

The compiler as we know it is generally attributed to Grace Hopper, who also popularized the notion of machine-independent programming languages and served as a technical consultant in 1959 to the project that would become the COBOL programming language. The second part is not important for today's post, but not enough people know how awesome Grace Hopper was and that's unfair.

It's been at least 60 years since we moved from assembly-only code into what we now call "good software engineering practices". Sure, punching assembly code into perforated cards was a lot of fun, and you could always add comments with a pen, right there on the cardboard like well-educated cavemen and cavewomen (cavepeople?). Or, and hear me out, we could use a well-designed programming language instead, with luxuries like comments, functions, modules, and even a type system if you're feeling fancy.

None of these things will make our code run faster. But I'm going to let you in on a tiny secret: the time programmers spend actually coding pales in comparison to the time programmers spend thinking about what their code should do. And that time is dwarfed by the time programmers spend cursing other people who couldn't add a comment to save their life, using variables named var and cramming lines of code as tightly as possible because they think it's good for the environment.

The type of code that keeps other people from strangling you is what we call "good code". And we can't talk about "good code" without its antithesis: "write-only" code. The term is used to describe languages whose syntax is, according to Wikipedia, "sufficiently dense and bizarre that any routine of significant size is too difficult to understand by other programmers and cannot be safely edited". Perl was heralded for a long time as the most popular "write-only" language, and it's hard to argue against it:

```perl
open my $fh, '<', $filename or die "error opening $filename: $!";
my $data = do { local $/; <$fh> };
```

This is far from the worst Perl has to offer, but it highlights the type of code you get when readability is put aside in favor of shorter, tighter code.
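For readers who don't speak Perl: `local $/` temporarily undefines the input record separator, so `<$fh>` returns the whole file at once instead of a single line. For contrast, a minimal Python sketch of the same "slurp the whole file" idiom; the function name `slurp` is hypothetical and UTF-8 encoding is an assumption:

```python
def slurp(filename: str) -> str:
    # Read the entire file into a single string; the `with` block closes it.
    # If the file can't be opened, an OSError propagates with the filename
    # and the reason attached, roughly what `or die "...: $!"` reports.
    with open(filename, encoding="utf-8") as fh:
        return fh.read()
```

It is one line longer than the Perl version, and nothing in it depends on knowing what a special global variable does.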

Some languages are more prone to this problem than others. The International Obfuscated C Code Contest is a prime example of the type of code that can be written when you really, really want to write something badly. And yet, I am willing to give C a pass (and even Perl, sometimes) for a few reasons:

- C was always supposed to be a thin layer on top of assembly, and was designed to run on computers with limited capabilities. It is a language for people who really, really need to save a couple of CPU cycles, readability be damned.

- We do have good practices for writing C code. It is possible to write okay code in C, and it will run reasonably fast.

- All modern C compilers have to remain backwards compatible. While some edge cases tend to go away with newer releases, C wouldn't be C without its wildest, foot-meet-gun features, and old code still needs to work.

Modern programming languages, on the other hand, don't get such an easy pass: if they are allowed to have as many abstraction layers and RAM as they want, have no backwards compatibility to worry about, and are free to follow 60+ years of research in good practices, then it's unforgivable to introduce the type of features that lead to write-only code.

Which takes us to our first stop: Rust. Take a look at the following code:

```rust
let f = File::open("hello.txt");

let mut f = match f {
    Ok(file) => file,
    Err(e) => return Err(e),
};
```

This code is relatively simple to understand: the variable f holds the result of opening the hello.txt file. The operation can either succeed or fail. If it succeeded, you can read the file's contents by extracting the file handle from Ok(file), and if it failed you can either do something with the error e or further propagate Err(e). If you have seen functional programming before, this concept may sound familiar to you. More importantly, this code makes sense even if you have never programmed with Rust before.

But once we introduce the ? operator, all that clarity goes out the window:

```rust
let mut f = File::open("hello.txt")?;
```

All the explicit error handling that we saw before is now hidden from you. In order to save 3 lines of code, we have now put our error handling logic behind an easy-to-overlook, hard-to-google ? symbol. It's literally there to make the code easier to write, even if it makes it harder to read.

And let's not forget that the operator also facilitates the "hot potato" style of catching exceptions[1], in which you simply... don't:

```rust
File::open("hello.txt")?.read_to_string(&mut s)?;
```
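Python has no ? operator, but the same trade-off can be sketched there. The first function below returns failure as a value, loosely mirroring the explicit `match` on `Ok`/`Err`; the second lets the exception fly, which is the "hot potato" style. The function names and the `(value, error)` tuple convention are hypothetical, not a standard Python idiom:

```python
from typing import Optional, Tuple


def read_explicit(path: str) -> Tuple[Optional[str], Optional[OSError]]:
    # Result-like style: failure comes back as a value, so the caller
    # has to look at it, much like matching on Ok/Err in Rust.
    try:
        with open(path, encoding="utf-8") as fh:
            return fh.read(), None
    except OSError as e:
        return None, e


def read_hot_potato(path: str) -> str:
    # Propagation style: nothing here says it can fail; an OSError
    # simply bubbles up to whoever called us, roughly what `?` does
    # with an Err.
    with open(path, encoding="utf-8") as fh:
        return fh.read()
```

A caller of `read_explicit` has to unpack both values and check the error before using the contents; a caller of `read_hot_potato` gets no such reminder, and the exception keeps traveling until something catches it.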

Python is perhaps the poster child of "readability over conciseness". The Zen of Python explicitly states, among others, that "readability counts" and that "sparser is better than dense". The Zen of Python is not only a great programming language design document, it is a great design document, period.

Which is why I'm still completely puzzled that both f-strings and the infamous walrus operator have made it into Python 3.6 and 3.8 respectively.

I can probably be convinced of adopting f-strings. At their core, they are designed to bring variables closer to where they are used, which makes sense:

```python
"Hello, {}. You are {}.".format(name, age)
f"Hello, {name}. You are {age}."
```
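A quick check, with hypothetical values for name and age, that the two lines above build the same string, and what happens if the leading f is left out:

```python
# Hypothetical example values.
name = "Ada"
age = 36

with_format = "Hello, {}. You are {}.".format(name, age)
with_fstring = f"Hello, {name}. You are {age}."
print(with_format == with_fstring)  # True: both are "Hello, Ada. You are 36."

# Forgetting the leading f is not an error: the braces are kept verbatim.
forgot_the_f = "Hello, {name}. You are {age}."
print(forgot_the_f)  # Hello, {name}. You are {age}.
```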

This seems to me like a perfectly sane idea, although not one without drawbacks. For instance, the f is both important and easy to overlook. Or there's no way to tell at a glance what the = here does:

```python
some_string = "Test"
print(f"{some_string=}")
```

(For the record: it will print some_string='Test'.) I also hate that you can now mix variables, functions, and formatting in a way that's almost designed to introduce subtle bugs:

```python
r = 1.5  # example radius, for illustration only
print(f"Diameter {2 * r:.2f}")
```
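To make the two constructs above less mysterious, here is a sketch that spells each one out with plain function calls (the radius value is hypothetical). The `{expr=}` form, available since Python 3.8, expands to the expression's text, an equals sign, and by default the `repr()` of its value; a format spec after the colon is applied to the result of the whole expression, just like the built-in `format()`:

```python
some_string = "Test"
r = 1.5  # hypothetical radius

# `{some_string=}` is shorthand for the source text, "=", and repr(value).
self_documenting = f"{some_string=}"
spelled_out = "some_string=" + repr(some_string)
print(self_documenting)                 # some_string='Test'
print(self_documenting == spelled_out)  # True

# `{2 * r:.2f}` evaluates 2 * r first, then applies the ".2f" spec.
print(f"Diameter {2 * r:.2f}")               # Diameter 3.00
print("Diameter " + format(2 * r, ".2f"))    # Diameter 3.00
```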

But this all pales in comparison to the walrus operator, an operator designed to save one line of code[2]:

```python
some_value = 5  # example value, for illustration only

# Before
my_var = some_value
if my_var > 3:
    print("my_var is larger than 3")

# After
if (my_var := some_value) > 3:
    print("my_var is larger than 3")
```

And what an expensive line of code it was! In order to save a line or two, you need a new operator that behaves unexpectedly if you forget the parentheses, has enough edge cases that even the official documentation brings them up, and led to an infamous dispute that ended with Python's creator taking a "permanent vacation" from his role. As a bonus, it also opens the door to questions like this one, which is answered with (paraphrasing) "those two cases behave differently, but in ways you wouldn't notice".
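A small sketch of the parentheses pitfall mentioned above. It follows from the operator's precedence: `:=` binds more loosely than comparisons, so leaving out the parentheses changes what gets assigned. The function names are hypothetical:

```python
def with_parentheses(value: int):
    # Assigns value to n, then compares n > 3.
    if (n := value) > 3:
        return n
    return None


def without_parentheses(value: int):
    # `:=` has lower precedence than `>`, so this assigns the result of
    # `value > 3` (a bool) to n, not value itself.
    if n := value > 3:
        return n
    return None


print(with_parentheses(10))     # 10
print(without_parentheses(10))  # True
```

Both versions are valid syntax and both take the branch, which is exactly why the difference is easy to miss.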

I think software development is hard enough as it is. I cannot convince the Rust community that explicit error handling is a good thing, but I hope I can at least persuade you to really, really use this type of construction only when it is the only alternative that makes sense.

Source code is not for machines - they are machines, and therefore they couldn't care less whether we use tabs, spaces, one operator, or ten. So let your code breathe. Make the purpose of your code obvious. Life is too short to figure out whatever it is that the K programming language is trying to do.